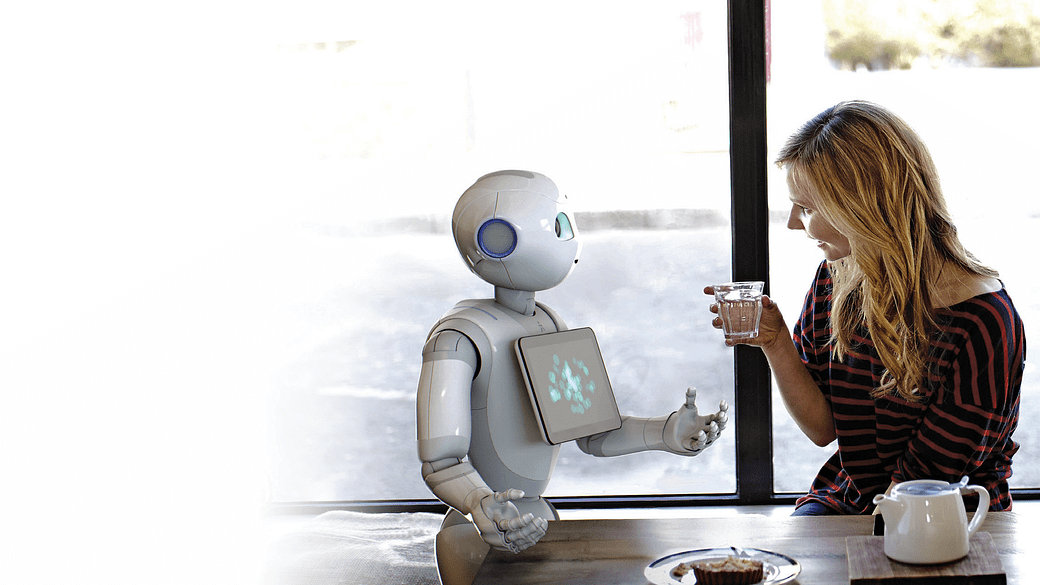

NEW YORK: Humanoids and other robots are in the news for taking on chores in restaurants or as cleaners, but researchers led by an Indian-origin scientist at the Massachusetts Institute of Technology (MIT) are now working on robots that can learn new tasks solely by observing humans.

The team has designed a system that lets such robots learn complicated tasks that would otherwise stump them with too many confusing rules.

One such task is setting a dinner table under certain conditions.

At its core, the system gives robots the human-like planning ability to simultaneously weigh many ambiguous — and potentially contradictory — requirements to reach an end goal.

In their work, the researchers compiled a dataset with information about how eight objects — a mug, glass, spoon, fork, knife, dinner plate, small plate, and bowl — could be placed on a table in various configurations.

A robotic arm first observed randomly selected human demonstrations of setting the table with the objects.

Then, the researchers tasked the arm with automatically setting a table in a specific configuration, in real-world experiments and in simulation, based on what it had seen.

To succeed, the robot had to weigh many possible placement orderings, even when items were purposely removed, stacked, or hidden.

Normally, that many variations would be too confusing for a robot.

But the researchers’ robot made no mistakes over several real-world experiments, and only a handful of mistakes over tens of thousands of simulated test runs.

“The vision is to put programming in the hands of domain experts, who can program robots through intuitive ways, rather than describing orders to an engineer to add to their code,” said first author Ankit Shah, a graduate student in the Department of Aeronautics and Astronautics (AeroAstro) and the Interactive Robotics Group.

That way, robots would no longer be limited to preprogrammed tasks.

“Factory workers can teach a robot to do multiple complex assembly tasks. Domestic robots can learn how to stack cabinets, load the dishwasher, or set the table from people at home,” Shah added.

Robots are fine planners in tasks with clear “specifications,” which help describe the task the robot needs to fulfill, considering its actions, environment, and end goal.

The researchers’ system, called PUnS (for Planning with Uncertain Specifications), enables a robot to hold a “belief” over a range of possible specifications.

The belief itself can then be used to dish out rewards and penalties.

“The robot is essentially hedging its bets in terms of what’s intended in a task, and takes actions that satisfy its belief, instead of us giving it a clear specification,” Shah noted.
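The idea of "hedging bets" over a belief can be illustrated with a small Python sketch. This is not the researchers' implementation: the rule helper `placed_before`, the probabilities in `BELIEF`, and the +p/-p scoring are all assumptions made up for illustration. Each candidate specification is treated as a simple yes/no check on the order in which objects are placed, and an order earns a reward weighted by how strongly the robot believes in each rule it satisfies, with a matching penalty for each rule it breaks.

```python
from itertools import permutations
from typing import Callable, List, Sequence, Tuple

# A specification is modeled here as a yes/no check on a placement order.
# This is a simplification chosen for the sketch, not the PUnS formalism.
Spec = Callable[[Sequence[str]], bool]
Belief = List[Tuple[Spec, float]]


def placed_before(first: str, second: str) -> Spec:
    """Hypothetical rule: `first` must be placed before `second`."""
    def check(order: Sequence[str]) -> bool:
        # If either object is absent, the rule cannot be violated.
        if first not in order or second not in order:
            return True
        return order.index(first) < order.index(second)
    return check


def belief_reward(order: Sequence[str], belief: Belief) -> float:
    """Add p for every satisfied rule and subtract p for every violated one."""
    return sum(p if spec(order) else -p for spec, p in belief)


def best_order(objects: Sequence[str], belief: Belief) -> Tuple[str, ...]:
    """Brute-force search over all placement orders (fine for a few objects)."""
    return max(permutations(objects), key=lambda order: belief_reward(order, belief))


# Made-up belief over three candidate specifications; the last two contradict
# each other, so the robot has to favor the one it finds more likely.
BELIEF: Belief = [
    (placed_before("dinner plate", "small plate"), 0.6),
    (placed_before("fork", "knife"), 0.3),
    (placed_before("knife", "fork"), 0.1),
]

OBJECTS = ["small plate", "knife", "fork", "dinner plate"]

if __name__ == "__main__":
    print(best_order(OBJECTS, BELIEF))
```

In this toy setup the highest-scoring orders place the dinner plate before the small plate and the fork before the knife, because those rules carry most of the assumed belief, while the less likely "knife before fork" rule is knowingly sacrificed.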

The researchers hope to modify the system to help robots change their behaviour based on verbal instructions, corrections or a user’s assessment of the robot’s performance.

“Say a person demonstrates to a robot how to set a table at only one spot. The person may say, ‘do the same thing for all other spots,’ or, ‘place the knife before the fork here instead,’” Shah added. IANS